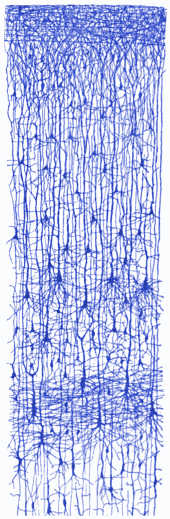

Drawing of cortex by Cajal

In the course "Brain-inspired computing", we give an introduction to biophysical models of nerve cells (neurons) and explore principles of computation and self-organization in biological and artificial neural networks.

Starting from the biophysics of ionic current flow across the cell membrane, we retrace the Nobel Prize-winning work of Alan Hodgkin and Andrew Huxley to extract simplified, mathematically tractable models of neuronal input integration and the generation of neuronal responses, called action potentials. We study signaling between neurons via chemical synapses and analyze the firing response of leaky integrate-and-fire neurons to spatio-temporal input from other network neurons. Since a description at the level of single action potentials becomes intractable in larger networks, we employ statistical methods, such as Fokker-Planck equations and auto- and cross-correlation functions, as well as dynamical systems theory, to characterize network evolution and neural coding. We then turn to the wide field of neural plasticity, that is, the brain's fascinating ability of self-organized adaptation and learning. We review experimental findings on neural plasticity, functional plasticity models and emergent computational capabilities of neural networks, with a particular focus on short-term and long-term synaptic plasticity. We touch on the design of artificial physical implementations of neurons and synapses in micro-electronic circuitry, enabling the development of novel high-performance neuro-inspired computing platforms. Finally, we examine deep conceptual similarities between information processing and learning in spiking neural networks on the one hand, and principles of Bayesian computation and machine learning on the other, using multilayer perceptron networks and Boltzmann machines as examples.

A recurring mathematical challenge in computational neuroscience, which will also accompany us throughout the course, is the development of a consistent mathematical description across multiple levels of abstraction, in order to balance biophysical accuracy against analytical tractability of a complex system. To master this challenge, students are expected to be familiar with calculus and linear algebra. For computer simulations, which make up a significant share of the exercises, at least basic knowledge of the Python programming language is strongly recommended.

Johannes Bill (Lecturer), Karlheinz Meier (Mentor), Andreas Hartel (Head Tutor), Dominik Dold (Tutor), Akos Ferenc Kungl (Tutor).

Class material (Moodle) and LSF listing.

Book: Petrovici, "Form Versus Function: Theory and Models for Neuronal Substrates" (free from university network)

Book: Gerstner et al., "Neuronal Dynamics: From single neurons to networks and models of cognition" (free online version)

The exercises will include Python programming. If you are not familiar with Python, we have assembled a short tutorial (with solutions) for self-study prior to the semester. Further questions on Python and the tutorial can be answered during the first exercise group in April.

In the seminar "Brain-inspired computing", we examine selected topics from computational neuroscience, brain-inspired learning theory and neuromorphic engineering in greater detail. Each week, one topic will be presented by a student in a 45-minute talk with subsequent discussion. The seminar is complementary to the course (see above) and will not provide a comprehensive overview of the field on its own. It is thus recommended to also attend the lectures, even though the seminar is formally independent.

List of planned seminar talks:

Topics will be introduced and assigned to speakers during the first meeting on April 19, 2017 (after the lecture). For the talks, any combination of chalkboard presentation and PowerPoint slides is admissible.

Karlheinz Meier (Mentor), Christoph Koke (Tutor), Andreas Baumbach (Tutor), Luziwei Leng (Tutor).

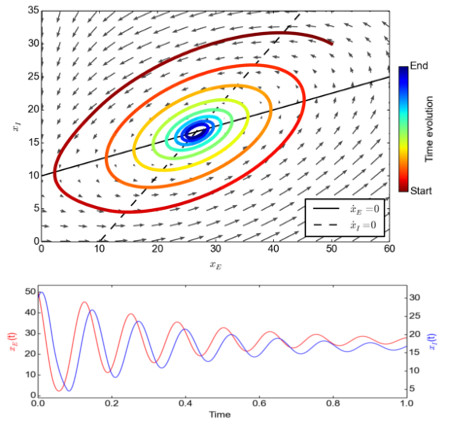

Phase plane analysis of two reciprocally connected neural populations

Electronic Visions Group – Prof. Dr. Johannes Schemmel

Im Neuenheimer Feld 225a

69120 Heidelberg

Germany

phone: +49 6221 549849

fax: +49 6221 549839

email: schemmel(at)kip.uni-heidelberg.de
