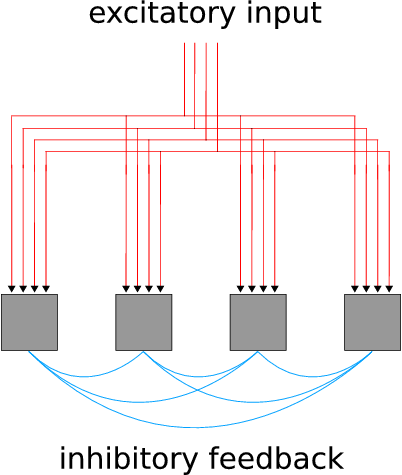

In a self-organizing winner-take-all (WTA) architecture, an unsupervised learning rule shapes the synaptic weights so that only a single neuron, or a small subset of neurons, is active when a given input is presented to the network.

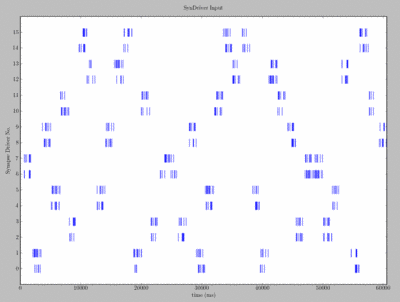

One unsupervised learning rule that enables the development of the WTA function is spike-timing-dependent plasticity (STDP).
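
As an illustration of how such a rule changes a weight, the following is a minimal sketch of a pair-based STDP update with exponential time windows. This is a common textbook form and not necessarily the variant used on the hardware; the amplitudes, time constants, weight bounds, and the function names `stdp_dw` and `apply_stdp` are assumptions made for this example.

```python
import math

# Hypothetical parameters, chosen only for illustration.
A_PLUS = 0.01     # potentiation amplitude
A_MINUS = 0.012   # depression amplitude
TAU_PLUS = 20.0   # potentiation time constant (ms)
TAU_MINUS = 20.0  # depression time constant (ms)


def stdp_dw(t_pre, t_post):
    """Weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:
        # Presynaptic spike precedes postsynaptic spike: potentiate.
        return A_PLUS * math.exp(-dt / TAU_PLUS)
    if dt < 0:
        # Postsynaptic spike precedes presynaptic spike: depress.
        return -A_MINUS * math.exp(dt / TAU_MINUS)
    return 0.0


def apply_stdp(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum the contributions of all spike pairs, then clip the weight."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_dw(t_pre, t_post)
    return min(max(w, w_min), w_max)
```

In this sketch, a synapse whose input spikes tend to arrive shortly before the output neuron fires is strengthened, which is the property the WTA learning relies on.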

After the learning procedure, the network can be used to discriminate between different classes of input signals. Each class is represented by the activity of a particular set of output neurons. As an application for the FACETS Stage 1 hardware, we try to set up a self-organizing winner-take-all network that maximizes its output entropy for a given set of input signal classes.
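
To make the entropy criterion concrete, here is a minimal sketch that estimates the Shannon entropy of the network output from a record of which neuron won each input presentation. Treating the output as a single winner index per presentation is a simplifying assumption, and `output_entropy` is a hypothetical helper name.

```python
import math
from collections import Counter


def output_entropy(winners):
    """Shannon entropy (in bits) of the empirical winner distribution.

    winners: sequence of winning output-neuron indices, one per presentation.
    """
    counts = Counter(winners)
    n = len(winners)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

Under this measure, a network in which one neuron wins every presentation has zero entropy, while a network that assigns four equally frequent input classes to four different neurons reaches the maximum of 2 bits.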

An important property of winner-take-all architectures is lateral inhibition (cross inhibition) between neurons. This mutual inhibition induces competition in the network and limits the output rates. During the learning competition, the synapses from the corresponding input(s) to the 'winner' neuron(s) are strengthened, whereas the output of the other neurons is suppressed through lateral inhibition.
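
The competition described above can be sketched in a strongly simplified, rate-based form. This is not a spiking model and not the hardware implementation: lateral inhibition is idealized as a hard selection of the most strongly driven neuron, and the function name `wta_step`, the learning rate, and the update rule (moving the winner's weights toward the input) are assumptions made for this example.

```python
def wta_step(weights, x, lr=0.1):
    """One competitive-learning step with idealized lateral inhibition.

    weights: list of weight lists, one per output neuron (modified in place).
    x: input vector with the same length as each weight list.
    Returns the winner index and the output activity of all neurons.
    """
    # Feed-forward drive of every output neuron.
    drives = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in weights]
    # The most strongly driven neuron wins (first one on ties).
    winner = max(range(len(drives)), key=drives.__getitem__)
    # Lateral inhibition, idealized: all other outputs are suppressed.
    outputs = [1.0 if j == winner else 0.0 for j in range(len(weights))]
    # Only the winner's synapses are moved toward the current input.
    weights[winner] = [
        w_i + lr * (x_i - w_i) for w_i, x_i in zip(weights[winner], x)
    ]
    return winner, outputs
```

Repeated over many presentations, each neuron's weights drift toward the inputs it wins, so different neurons come to respond to different input classes.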

Because putative cortical building blocks, such as hypercolumns in the visual cortex, are believed to implement winner-take-all functions (see the reference below), studying the performance of this architecture is of neuroscientific relevance.

Johansson, C., Sandberg, A., and Lansner, A. (2002). A neural network with hypercolumns. In Proc. ICANN 2002, LNCS 2415, Madrid, Spain, pages 192-197.

Electronic Visions Group – Prof. Dr. Johannes Schemmel
